Xuelong Sun

CONNECTING MAKES POSSIBILITY.

Associate Professor, 2024 - now

Machine Life and Intelligence Research Center, Guangzhou University, China

Honorary Research Fellow, 2024 - now

School of Computing and Mathematical Sciences, University of Leicester, Leicester, United Kingdom

Research Interests

Seeking in the wilderness of human knowledge

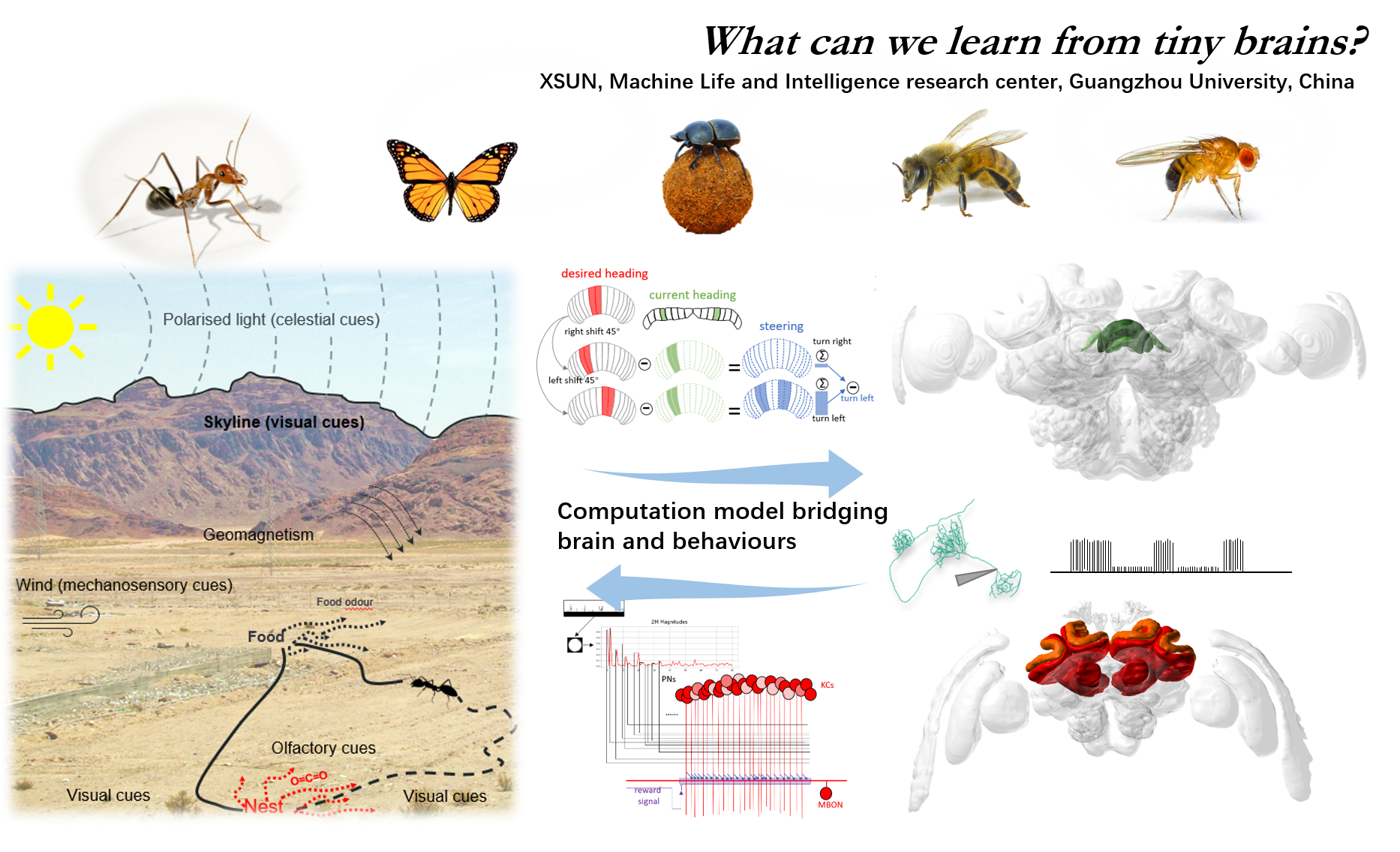

Insect Navigation

How tiny brains solve complex tasks.

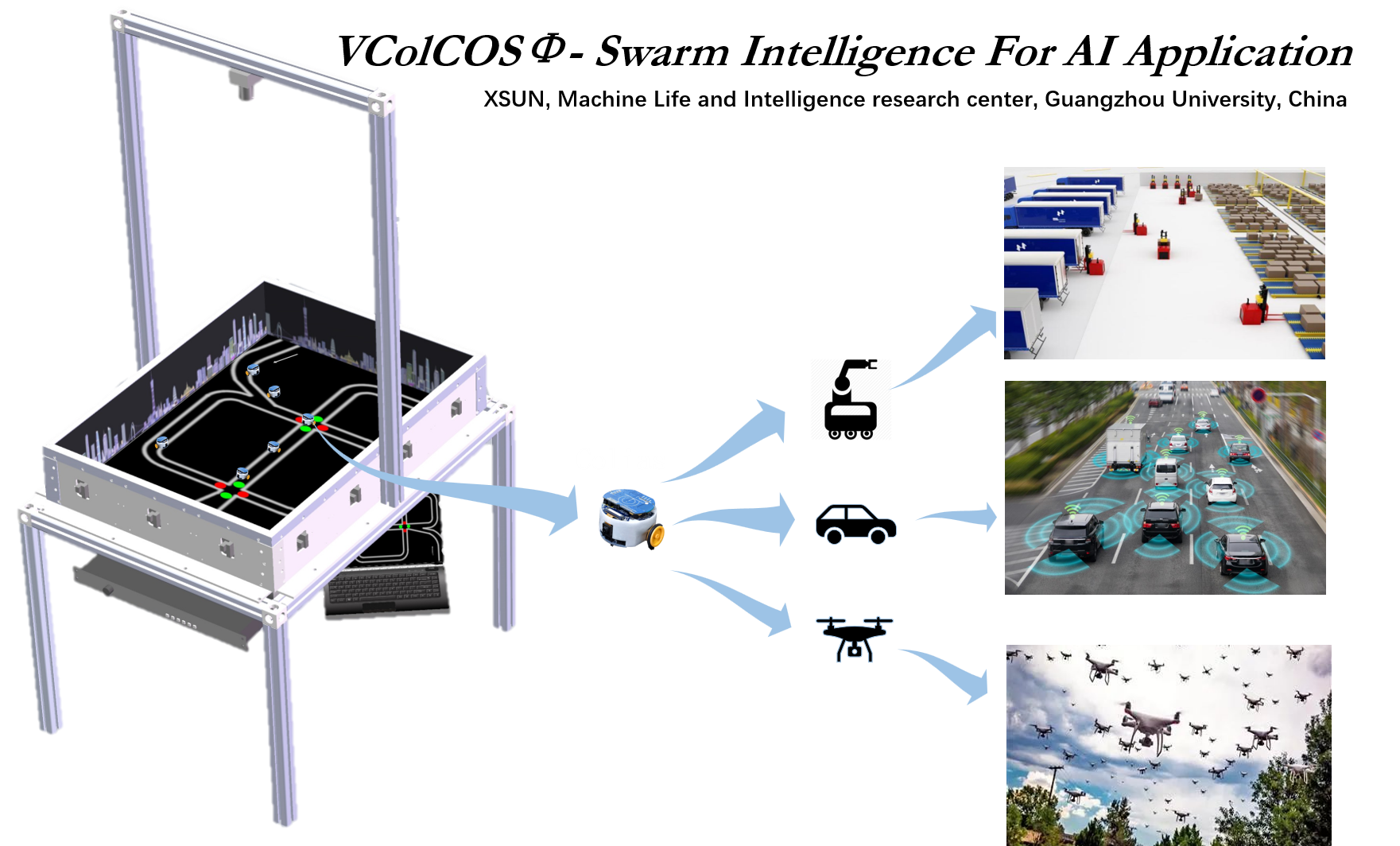

Swarm Intelligence

Intelligence emerging at the swarm level.

Bio-robotics

From robots to biology and back.

Publications

Sharing ideas with the world

Featured

Xuelong Sun, Shigang Yue and Michael Mangan, 2020

A decentralised neural model explaining optimal integration of navigational strategies in insects

We present a bio-constrained computational model that incorporates key elements of insect visual navigation, including frequency-encoded image processing for visual homing and route following. The model uses a ring attractor mechanism to integrate navigational cues optimally. We also investigate the roles of the central complex (CX) and mushroom bodies (MB) in insect navigation.

Xuelong Sun, Shigang Yue and Michael Mangan, 2021

How the insect central complex could coordinate multimodal navigation

Our findings suggest that the model proposed in my previous eLife paper can be applied to elucidate the olfactory navigation abilities of insects. This further supports the notion that the central complex (CX) serves as the navigation center in insects, highlighting the presence of shared mechanisms across sensory domains and even among different insect species.

Xuelong Sun, Qinbing Fu, Jigen Peng and Shigang Yue, 2023

An insect-inspired model facilitating autonomous navigation by incorporating goal approaching and collision avoidance

We have developed a navigation model solely inspired by insects, enabling autonomous navigation in a three-dimensional (3D) environment encompassing both static and dynamic obstacles.

Tian Liu, Xuelong Sun, Cheng Hu, Qinbing Fu and Shigang Yue, 2021

A Versatile Vision-Pheromone-Communication Platform for Swarm Robotics

We implemented a dynamically changeable wall in the arena, a significant advance for the platform. This dynamic element enables versatile and realistic experiments: reconfiguring the wall opens new possibilities for studying diverse scenarios and for investigating how dynamic obstacles affect behavior.

Xuelong Sun, Cheng Hu, Tian Liu, Shigang Yue, Jigen Peng and Qinbing Fu, 2023

Translating Virtual Prey-Predator Interaction to Real-World Robotic Environments: Enabling Multimodal Sensing and Evolutionary Dynamics

We designed and implemented a prey-predator interaction scenario that incorporates visual and olfactory sensory cues, not only in computer simulations but also in a real multi-robot system. The emergent spatiotemporal dynamics we observed show that the study of prey-predator interactions can be successfully transferred from virtual simulations to the physical world. This highlights the potential of multi-robot approaches for studying prey-predator interactions and lays the groundwork for future investigations of multimodal sensory processing under real-world constraints.

Xuelong Sun, Michael Mangan, Jigen Peng and Shigang Yue, 2025

I2Bot: an open-source tool for multimodal and embodied simulation of insect navigation

We developed I2Bot, an open-source platform based on Webots for simulating embodied insect navigation behaviours. By integrating gait controllers and computational models into I2Bot, we implemented classical embodied navigation behaviours and revealed some fundamental navigation principles. By open-sourcing I2Bot, we aim to accelerate the understanding of insect intelligence and to foster advances in autonomous robotic systems.

Others

Xuelong Sun, Tian Liu, Cheng Hu, Qinbing Fu and Shigang Yue, 2019

ColCOS φ: A multiple pheromone communication system for swarm robotics and social insects research

We have developed an optically emulated pheromone communication platform inspired by the multifaceted pheromone communication observed in ants. This innovative platform not only replicates the pheromone-based communication system but also enables the localization of multiple robots using vision-based techniques.

Xuelong Sun, Shigang Yue and Michael Mangan, 2018

An analysis of a ring attractor model for cue integration

This research reveals that the classical ring attractor network can optimally integrate multiple directional cues without requiring neural plasticity. This finding laid the foundation for my subsequent model of insect navigation.
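For readers unfamiliar with the term, "optimal integration" of directional cues has a simple closed form if each cue is assumed to follow a von Mises distribution: the combined estimate points along the sum of the cues' unit vectors, each weighted by its concentration (reliability). The Python sketch below computes that statistical benchmark only; it is not the ring attractor network itself, and the function name `integrate_cues` and the von Mises assumption are illustrative choices.

```python
import math


def integrate_cues(headings, concentrations):
    """Optimally combine directional cues modelled as von Mises distributions.

    headings: cue directions in radians.
    concentrations: von Mises concentration (reliability) of each cue.
    Returns (combined_heading, combined_concentration).

    The product of von Mises densities is again von Mises; its mean
    direction and concentration are the angle and length of the
    concentration-weighted sum of unit vectors.
    """
    if len(headings) != len(concentrations):
        raise ValueError("each cue needs exactly one concentration")
    x = sum(k * math.cos(h) for h, k in zip(headings, concentrations))
    y = sum(k * math.sin(h) for h, k in zip(headings, concentrations))
    return math.atan2(y, x), math.hypot(x, y)


if __name__ == "__main__":
    # Two equally reliable cues, at 0 and 90 degrees.
    heading, kappa = integrate_cues([0.0, math.pi / 2], [1.0, 1.0])
    print(round(math.degrees(heading), 2), round(kappa, 3))  # 45.0 1.414
    # Making the first cue three times as reliable pulls the estimate towards it.
    heading, _ = integrate_cues([0.0, math.pi / 2], [3.0, 1.0])
    print(round(math.degrees(heading), 2))  # 18.43
```

Two equally reliable cues at 0 and 90 degrees combine to 45 degrees; tripling the first cue's concentration shifts the estimate to about 18.4 degrees, and the combined concentration is always at least as large as that of any single cue pointing the same way.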

Tian Liu, Xuelong Sun, Cheng Hu, Qinbing Fu and Shigang Yue, 2021

A Multiple Pheromone Communication System for Swarm Intelligence

We extend our previous work presented at ICARM 2019 with a thorough analysis of the platform, systematically examining and organizing the results to give a deeper understanding of its capabilities and performance.

Qinbing Fu, Xuelong Sun, Tian Liu, Cheng Hu and Shigang Yue, 2021

Robustness of Bio-Inspired Visual Systems for Collision Prediction in Critical Robot Traffic

We used the ColCOS φ pheromone communication system to emulate city traffic, creating a unique and cost-effective robotic approach for evaluating online visual systems in dynamic scenes. This methodology let us investigate the LGMD (lobula giant movement detector) model in a novel and manageable way.

Jialang Hong, Xuelong Sun, Jigen Peng and Qinbing Fu, 2024

A Bio-Inspired Probabilistic Neural Network Model for Noise-Resistant Collision Perception

We embedded a probabilistic module into LGMD models and observed strong performance. This study showcases a straightforward yet effective approach to enhancing collision perception in noisy environments.

Hao Chen, Xuelong Sun, Cheng Hu, Hongxin Wang and Jigen Peng, 2024

Unveiling the power of Haar frequency domain: Advancing small target motion detection in dim light

We integrated visual information within spatiotemporal windows regulated by the frequency parameters of Haar wavelets, effectively discriminating small target motion from the random noise caused by dim light.

Yani Chen, Lin Zhang, Hao Chen, Xuelong Sun and Jigen Peng, 2024

Synaptic ring attractor: A unified framework for attractor dynamics and multiple cues integration

We built a unified ring attractor network with simple synaptic computation that can account for many neural dynamics observed in animal brains, especially cue integration in the insect head-direction system.

Experiences

Cherishing every moment of my life

2021 - 2024: Postdoc, Guangzhou University, China

2023 - 2024: Honorary Research Fellow, University of Leicester, UK

2021 - 2023: Honorary Research Fellow, University of Lincoln, UK

2016 - 2021: PhD student, University of Lincoln, UK

- 2017 - 2018: Visiting Scholar, Tsinghua University, China

- 2019 - 2020: Visiting Scholar, Guangzhou University, China

2012 - 2016: Undergraduate, Chongqing University, China

2009 - 2012: High School, No.2 High School of Huainan, Anhui Province, China

Skills

Learning is one of the happiest things in the world

Programming

Python, C, HTML/CSS/JS

Language

English, Chinese, Japanese

Software

Blender, Webots, PR, Office, Inkscape